Last night was supposed to be a normal one — just pushing a few commits before sleeping — and then GitHub suddenly stopped loading. At first I thought my internet was acting up again, but nope, everything else was working perfectly. GitHub, on the other hand, just showed a plain white page with a big 504 Gateway Timeout message sitting there like a troll.

And the funniest part? It happened right when I actually had motivation to work.

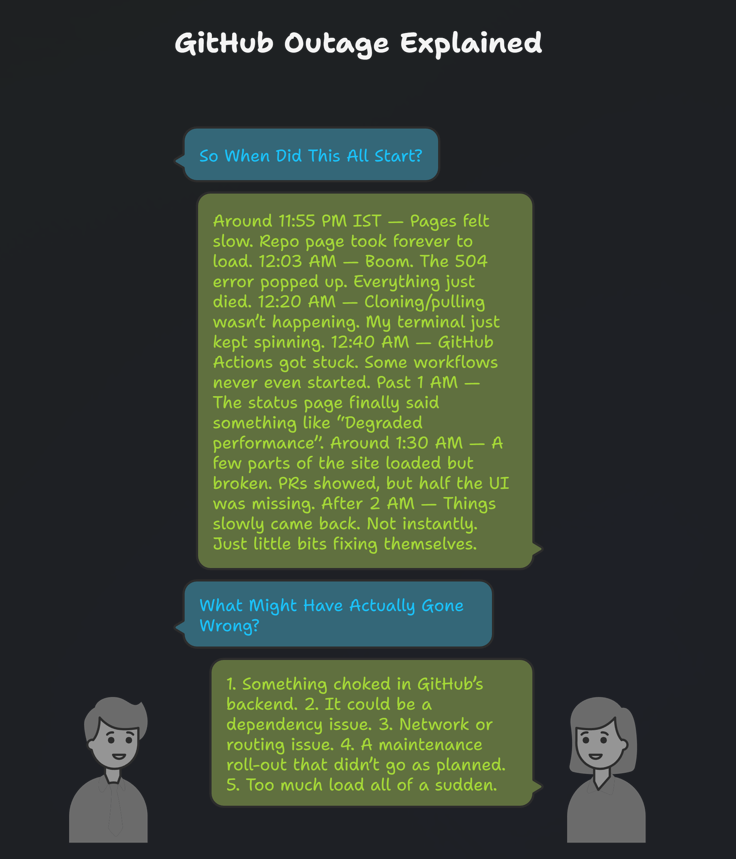

So When Did This All Start? (My Timeline)

I’m writing this from how things happened on my screen, and from a bunch of developer posts I was reading while trying to figure out what broke.

- Around 11:55 PM IST — Pages felt slow. Repo page took forever to load.

- 12:03 AM — Boom. The 504 error popped up. Everything just died.

- 12:20 AM — Cloning/pulling wasn’t happening. My terminal just kept spinning.

- 12:40 AM — GitHub Actions got stuck. Some workflows never even started.

- Past 1 AM — The status page finally said something like “Degraded performance”.

- Around 1:30 AM — A few parts of the site loaded, but they were broken. PRs showed up, but half the UI was missing.

- After 2 AM — Things slowly came back. Not instantly. Just little bits fixing themselves.

This was probably one of the biggest hiccups I’ve personally seen from GitHub in a while.

What Might Have Actually Gone Wrong?

GitHub hasn’t officially explained the root cause yet (detailed write-ups, when they come, usually show up later), but based on the way everything behaved last night, a few things are pretty likely.

1. Something choked in GitHub’s backend

A 504 almost always means one service upstream didn’t reply in time.

When that happens, the load balancer just gives up and throws the error.
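To make that concrete, here is a tiny sketch of the "give up and throw a 504" behavior. This is not GitHub's code, just a toy gateway I made up: `gateway`, `slow_upstream`, and the timeout values are all hypothetical.

```python
import concurrent.futures
import time


def gateway(upstream, timeout_s):
    """Call upstream() and return (status, body).

    If the upstream does not answer within timeout_s seconds,
    stop waiting and answer with 504, like a proxy would.
    """
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(upstream)
    try:
        return 200, future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        # The upstream may still be working; the gateway just stops waiting.
        return 504, "Gateway Timeout"
    except Exception:
        # The upstream answered, but with a failure: that is a 502, not a 504.
        return 502, "Bad Gateway"
    finally:
        pool.shutdown(wait=False)


def slow_upstream():
    """Hypothetical backend that takes too long to reply."""
    time.sleep(0.3)
    return "finally done"


if __name__ == "__main__":
    print(gateway(lambda: "hello", timeout_s=1.0))
    print(gateway(slow_upstream, timeout_s=0.05))
```

The thing to notice is that the backend never has to crash. It only has to be slower than the gateway's patience.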

2. It could be a dependency issue

GitHub is basically one huge application backed by a lot of internal services talking to each other.

If even one of those “core” ones slows down — like auth, repo indexing, or metadata — the whole chain collapses. It’s like one guy in a relay race tripping and everyone else smashing into him.
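Here is a rough way to picture that relay race in code. I am assuming a request passes through a few services in order and has one overall time budget. The service names come from my examples above, and every number is invented for illustration.

```python
def handle_request(chain, latencies_ms, deadline_ms):
    """Walk the services in order and add up their latencies.

    Returns (status, culprit): 200 and None if the total stays within
    the deadline, otherwise 504 and the service where the budget ran out.
    """
    elapsed_ms = 0
    for service in chain:
        elapsed_ms += latencies_ms[service]
        if elapsed_ms > deadline_ms:
            return 504, service
    return 200, None


# Hypothetical call chain and latencies, not real GitHub numbers.
CHAIN = ["auth", "metadata", "repo_index"]
HEALTHY = {"auth": 20, "metadata": 40, "repo_index": 80}
AUTH_SLOW = {"auth": 9000, "metadata": 40, "repo_index": 80}

if __name__ == "__main__":
    print(handle_request(CHAIN, HEALTHY, deadline_ms=5000))
    print(handle_request(CHAIN, AUTH_SLOW, deadline_ms=5000))
```

In the second case `metadata` and `repo_index` are perfectly healthy, but the request dies before it ever reaches them. One slow service near the front is enough.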

3. Network or routing issue

Sometimes one bad routing update can mess up everything globally.

A CDN or internal edge routing layer is usually one of the first suspects in big outages.

4. A maintenance roll-out that didn’t go as planned

This happens ALL the time in big systems.

You push an update, everything looks fine, then 15 minutes later some downstream service starts failing and… chaos.

5. Too much load all of a sudden

Maybe too many Actions workflows triggered at once, or traffic spiked.

When the platform hits its limits, these timeouts happen.
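A back-of-the-envelope simulation shows why a spike turns into timeouts instead of just "a bit slower". This is a toy queue model with made-up numbers: `simulate_backlog`, the arrival counts, the capacity, and the timeout are all my own assumptions.

```python
def simulate_backlog(arrivals_per_sec, capacity_per_sec, timeout_s):
    """Track the request backlog second by second.

    Each second, `capacity_per_sec` requests get served. Anything left over
    waits in the backlog. A newly queued request times out once the estimated
    wait (backlog / capacity) is longer than timeout_s.
    Returns a list of (second, backlog, timing_out) tuples.
    """
    backlog = 0
    timeline = []
    for second, arriving in enumerate(arrivals_per_sec):
        backlog = max(0, backlog + arriving - capacity_per_sec)
        wait_s = backlog / capacity_per_sec
        timeline.append((second, backlog, wait_s > timeout_s))
    return timeline


if __name__ == "__main__":
    # Quiet, quiet, then a three-second spike, then quiet again.
    arrivals = [50, 50, 400, 400, 400, 50]
    for row in simulate_backlog(arrivals, capacity_per_sec=100, timeout_s=5):
        print(row)
```

Even after the spike ends, the backlog takes a while to drain, which matches how things came back in little bits instead of all at once.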

(These are educated guesses, but honestly, every developer knows these are the usual suspects.)

How Developers Reacted (The Fun Part)

The moment GitHub goes down, developers don’t panic — they turn into comedians.

Here are some of the things I saw:

- “So GitHub is down. Guess I’m done for the day.”

- “I can’t push my code, now nobody will believe I actually worked.”

- “Deployment stuck. I’m stuck. Everyone’s stuck.”

- “This is my sign to sleep.”

- “GitHub going down during my deadline is like nature telling me to quit.”

And of course, memes everywhere.

A surprisingly productive number of memes.

Reddit, Twitter/X, and even random Telegram groups were full of people refreshing the status page like it was a cricket score.

What I Learned From This (Again)

Every time a big platform goes down, you get a small reminder that even the most powerful tech companies with insane infrastructure can still break for an hour or two. No one is untouchable.

GitHub eventually fixed everything, and by early morning things looked normal again.

But for a couple of hours, developers around the world were basically… on a forced break.